This deluge of reading is worsened for paper-reviewers, who not only have to read very difficult papers, but also have to read closely, check their validity, and write reviews. Today we read about how to deal with a deluge of papers to review. Today's paper is presented in a video too:

Avoiding a Tragedy of the Commons in the Peer Review Process (2018), by D. Sculley, Jasper Snoek, Alex Wiltschko.

the massive growth in the field of machine learning ... threatening both the sustainability of an effective review process and the overall progress of the field.

we propose a rubric to hold reviewers to an objective standard for review quality. In turn, we also propose that reviewers be given appropriate incentive. As one possible such incentive, we explore the idea of financial compensation on a per-review basis. We suggest reasonable funding models and thoughts on long term effects

Too much to review in Machine Learning

In the last year alone, the number of conference paper submissions needing review has increased by 47% for ICML, by 50% for NeurIPS, and by almost 100% for ICLR. This exponential growth rate has undoubtedly strained the community...

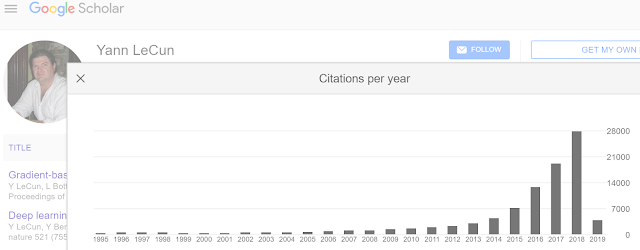

We are in an exponential growth phase of ML research. This is easy to see in the citation counts of a big name in ML like Yann LeCun.

There's a threat of a Tragedy of the Commons. Reviewing is unpaid work that scholars do as community service. Time spent on reviewing is therefore a kind of "free common property", and researchers might overuse it.

Review quality has gone down:

Indeed, we may already be at a breaking point. In mid-2018 we looked at hundreds of past NeurIPS and ICLR reviews to find high quality reviews to use as exemplars in an updated reviewer guide... but were surprised at the difficulty of finding individual reviews that [were fully satisfactory].

And the review process is not very reproducible:

The NeurIPS Experiment of 2014... found that when papers were randomly re-reviewed by independent committees of reviewers within the NeurIPS reviewing process, almost 60% of the papers accepted by the first committee were rejected by the second.

Solution:

- Standardize the review process so that review quality goes up.

- Reward reviewers for good reviews.

Scoring reviews

The authors propose a 7-point scoring standard for a good review. The criteria are mostly common sense, so I won't type them out here.

Rewarding reviews

Approximate price: USD 1000 per review.

What is a reasonable level of compensation? ... we would suggest a figure of USD 1000 per paper per review as a reasonable value of compensation. We arrive at this figure by noting that a complete review including reading, understanding, verifying, and writing should take at least 4 hours of attention from a qualified expert in the field. Assuming a rate of USD 250 per hour as a conservative estimate for consulting work done by experts in machine learning in the current market...

It has been done in other fields:

... other fields do incorporate compensation into their peer review and publication processes... the National Institute of Health (NIH), a major government funding agency for research in biology and medicine, pays an honorarium for peer review activities for grants... Neither the presence of paid editorial oversight nor compensated peer review have been raised as impediments to objectivity in the fields that employ them.

At USD 1000 per review, it won't be cheap to pay for the roughly 15000 reviews of a single conference:

...on the order of USD 15 million for a conference like NeurIPS 2018.

Differential pricing (selling the same thing at different prices to different consumers) could help:

NeurIPS 2018 famously sold out within roughly 6 minutes of registration opening... demand far exceeds supply... we suggest to offer differential pricing, for example charging significantly more for industry-affiliated attendees than for academic researchers or current students... imagine a world in which industry-affiliated attendees were charged a figure on the order of USD 5000. This would provide a budget of the correct magnitude. As beneficial side effects, it may also mitigate some of the registration crunches and provide a mechanism to further promote inclusion and diversity at registration time.

So it would cover the budget and promote diversity at the same time.
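The figures quoted above reduce to simple arithmetic, and a minimal sketch makes the orders of magnitude concrete. The four constants come from the quoted passages. The function names are hypothetical, the review count is derived rather than quoted, and the attendee count assumes that the entire industry fee goes toward reviewing, which is a simplification of mine and not a claim from the paper.

```python
# Figures quoted from the paper.
HOURS_PER_REVIEW = 4            # "at least 4 hours of attention"
HOURLY_RATE_USD = 250           # "USD 250 per hour as a conservative estimate"
TOTAL_BUDGET_USD = 15_000_000   # "on the order of USD 15 million"
INDUSTRY_FEE_USD = 5_000        # "on the order of USD 5000"


def cost_per_review(hours=HOURS_PER_REVIEW, rate=HOURLY_RATE_USD):
    """Compensation for one review of one paper, in USD."""
    return hours * rate


def implied_review_count(budget=TOTAL_BUDGET_USD):
    """Number of paid reviews the budget covers (derived, not quoted)."""
    return budget // cost_per_review()


def industry_attendees_needed(budget=TOTAL_BUDGET_USD, fee=INDUSTRY_FEE_USD):
    """Industry registrations needed to cover the budget.

    Assumes the whole fee funds reviewing (a simplification).
    Uses ceiling division so a partial attendee rounds up.
    """
    return -(-budget // fee)


if __name__ == "__main__":
    print("USD per review:", cost_per_review())
    print("Reviews covered by the budget:", implied_review_count())
    print("Industry attendees needed:", industry_attendees_needed())
```

Under these assumptions, a few thousand industry registrations at the suggested fee would be of the same order as the full reviewing budget, which is what the authors mean by "a budget of the correct magnitude".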

Another idea is co-pay (raising prices so that consumers don't use up a cheap resource):

In this setting, paper submission would entail a fee which would both be used both to collect funds to help offset reviewing cost, while also providing a disincentive for authors to submit work that is not yet fully ready for review.

Since different people have different income levels, a monetary co-pay plan might not work well. As an alternative, we can let people pay in reviews: review a paper to get your own paper reviewed.

Sponsorship. It should work, but might introduce too much bias:

Industry sponsorship currently provides more than USD 1.6 million to NeurIPS to fund various events. Given the value of machine learning research to the industrial world... We believe it may be reasonable to create sponsorship opportunities to help fund review costs... because of the possibility of perceived bias toward sponsoring institutions, we consider direct sponsorship a less desirable option.

Triage. By custom, every paper gets a quality review, even if it's garbage. This should change so that only papers with a real chance of acceptance get in-depth reviews:

Multi-stage triage that quickly removes papers far below the bar may reduce the reviewing burden substantially, while improving the ability of reviewers to dive deeply into the details of higher quality papers. This is common practice in journal-centric fields like biology and medicine...
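To illustrate how such a triage stage could cut the reviewing load, here is a minimal sketch. Everything in it is hypothetical: the `Submission` type, the `quick_score` from a fast first pass, the `bar` and `margin` values, and the assumption of three full reviews per paper. The paper does not specify a triage procedure, so this is one possible reading and not the authors' method.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    title: str
    quick_score: float  # hypothetical score from a fast first-pass read


def triage(submissions, bar, margin):
    """Split submissions into (full_review, rejected_early).

    Only papers far below the bar, i.e. with quick_score < bar - margin,
    are removed early. Borderline papers still get full reviews.
    """
    cutoff = bar - margin
    full_review = [s for s in submissions if s.quick_score >= cutoff]
    rejected_early = [s for s in submissions if s.quick_score < cutoff]
    return full_review, rejected_early


def full_reviews_needed(n_papers, reviews_per_paper=3):
    """Full reviews required, assuming a fixed number per paper."""
    return n_papers * reviews_per_paper


if __name__ == "__main__":
    # Made-up submissions, for illustration only.
    papers = [
        Submission("A", 2.0),
        Submission("B", 5.5),
        Submission("C", 7.0),
        Submission("D", 3.9),
    ]
    keep, drop = triage(papers, bar=6.0, margin=2.0)
    print("Full reviews without triage:", full_reviews_needed(len(papers)))
    print("Full reviews with triage:", full_reviews_needed(len(keep)))
    print("Rejected early:", [s.title for s in drop])
```

The `margin` parameter is the design choice that matters here: a wide margin keeps borderline papers in the full-review pool, so triage only removes submissions that a quick read already places well below the bar.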

Possible long-term effects

... peer reviews are unfunded and are often distributed to exactly those people who are least economically advantaged... such as students nearing completion of their PhDs who are currently required by tradition to provide this value for free.

Paying them for reviews would help their finances and help them become better scholars.

Paying for reviews could also professionalize reviewing. Instead of every researcher reviewing on the side, some could specialize in reviewing, which should raise review quality.

In a system in which reviewers may actually prefer to review more papers in order to increase their compensation, we may end up with reviewers deciding to take on 10 or 20 reviews. Such reviewers may have better perspective than is currently typical, and may be more efficient on a per-review basis when reviewing many papers on a similar topic.
